We're not far from the day when artificial intelligence will provide us with a paintbrush for reality. As the foundations we've relied upon lose their integrity, many people find themselves afraid of what's to come. But we've always lived in a world where our senses misrepresent reality. New technologies will help us get closer to the truth by showing us where we can't find it.

From a historical viewpoint, we've never successfully stopped the progression of any technology and owe the level of safety and security we enjoy to that ongoing progression. While normal accidents do occur and the downsides of progress likely won't ever cease to exist, we make the problem worse when trying to fight the inevitable. Besides, reality has never been as clear and accurate as we want to believe. We fight against new technology because we believe it creates uncertainty when, more accurately, it only shines a light on the uncertainty that's always existed and we've preferred to ignore.

We Already Accept False Realities Every Day

The dissolution of our reality—a fear brought on by artificial intelligence—is a mirage. For a good while, we've put our trust in what we see and hear throughout our lives, whether in the media or from people we know. But neither constitutes reality because reality has never been absolute. Our reality is a relative construct. It's what we agree upon together based on the information we gain from our experience. By observing and sharing our observations we can attempt to construct a picture of an objective reality. Naturally, that goal becomes much harder to achieve when people lie or utilize technology that makes convincing lies more possible. It seems to threaten the very stability of reality as we know it.

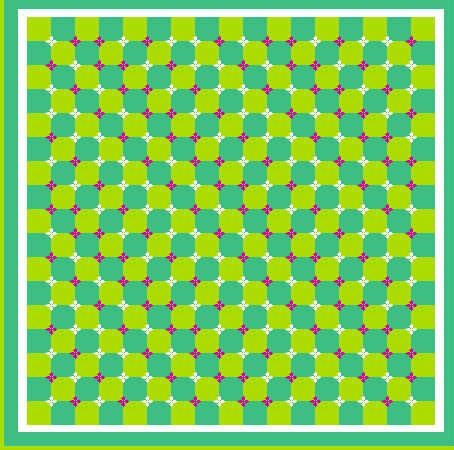

But our idea of reality is flawed. It's composed of human observation and conjecture. It's limited by how our bodies sense the world around us and how our brains process that acquired information. Although we may capture a lot, we can only sense a sliver of the electromagnetic spectrum, and even that constitutes too much for our brains to process at once. Like the healing brush in Photoshop, our brains fill in the gaps in our vision with their best guess at what belongs. You can test your blind spots to get a better idea of how this works or just watch it in action by looking at an optical illusion like this:

This, among other cognitive processes, produces subjective versions of reality. You already cannot experience every aspect of a moment, and you certainly won't remember every detail. But on top of that, you don't even see everything you see. Your brain constructs the missing parts, hides visual information (especially when we're moving), makes you hear the wrong sounds, and can mistake rubber limbs for your own. When you have a limited view of any given moment and the information you obtain isn't fully accurate to begin with, you're left with a more subjective version of reality than you're able to gauge. Trusting collective human observations led us to believe geese grew on trees for about 700 years. Human observations, conclusions, and beliefs are not objective reality. Even in the best of circumstances we will, at times, get things extraordinarily wrong.

Everything you know and understand passes through your brain, and your brain doesn't offer an accurate picture of reality. To make matters worse, our memories often fail us in numerous ways. The way we see the world is neither true nor remotely complete. So, for a long time, we have relied on other people to help us understand what's true. That can work just fine in many situations, but sometimes people will lie or have vastly different versions of the same situation due to past experiences. Either way, problems occur when subjective observations clash and people cannot agree upon what really happened. Technology has helped us improve upon that problem, the same technology we widely feared during its initial introduction.

We Either Trust or Distrust Technology Too Much

Throughout time we've created tools to help us survive as a species. By developing new tools we've been able to spread information more easily and create a sense of trust. Video and audio recordings allowed us to bypass the brain's processes and capture an unaugmented record of an event, at least from a single point of view. A video camera still fails to capture the full reality of a given moment.

For example, imagine someone pulls out a knife in a fight and fakes a swipe to try and frighten their attacker without any intention of doing actual harm. Video surveillance paints a different picture without this context. To an officer of the law, the security footage will show assault with a deadly weapon. With no other evidence to provide context, the officer has to err on the side of caution and make an arrest.

Whether or not such assumptions lead to less crime or more questionable arrests doesn't change the fact that an objective recording of reality misses information. We trust recordings as truth when they only offer a part of the truth. When we trust video, audio, or anything that cannot tell the full story, we put our faith in a medium that lies by omission by design—just like any observer of reality.

Faults exist in technology but that doesn't offer cause to discard it. Overall, we've benefited from advancements that allowed objective recordings of the world around us. Not all recordings require additional context. A video of a cute puppy might not be cute to everyone, but, for the most part, people will agree they're seeing a puppy. Meanwhile, we used to call the sky green and can't agree on the color of a dress in a bad photograph. As technology progresses and becomes accessible to more and more people, we all begin to learn when and how it can paint reality with a less accurate brush than we liked to believe.

This realization causes fear because our system of understanding the world starts to break down. We can't rely on the tools we once could to understand our world. We have to question the reliability of the things we see recorded, and that goes against much of what we've learned, experienced, and integrated into our identities. When new technologies emerge that further erode our ability to trust what's familiar, they incite this fear, which we tend to attribute to the technology rather than ourselves. Phone calls are a normal part of life but they were, initially, seen as an instrument of the devil.

Today, AI faces similar problems. Deepfakes stirred a panic when people began to see how easily a machine could swap faces in videos with startling accuracy, given numerous quality videos and photos that met specific requirements. While these deepfakes rarely fooled anyone, we all got a glimpse of the near future where artificial intelligence would progress to a point where we'd fail to know the difference. That day came last month, when Stanford University, Princeton University, the Max Planck Institute for Informatics, and Adobe released a paper that demonstrated an incredibly simple method of editing recorded video to change spoken dialogue, both visually and aurally, that fooled the majority of people who saw the results. Take a look:

Visit the paper's abstract and you'll find most of the text dedicated to ethical considerations, a common practice nowadays. AI researchers can't do their jobs well without considering the eventual applications of their work. That includes discussing malicious use cases so people can understand how to use the technology for good purposes and prepare for the problems expected to arise.

Ethics statements can feed public panic because they indirectly act as a sort of vague science fiction in which our fearful imaginations must fill in the blanks. When experts present the problem it's easy to think of only the worst-case scenarios. Even when you consider the benefits, faster video editing and error correction seem like a small advantage when the negatives include fake news people will struggle to identify.

We Only Lose When We Resist Progress

Nevertheless, this technology will emerge regardless of any efforts to stop it. Our own history repeatedly demonstrates that any efforts to stop the progression of science will, at most, result in a brief delay. We should not want to stop people who understand and care about the ethics of what they create because that leaves others to create the same technology in the shadows. What we can't see might seem less frightening for a while, but we have no way of preparing, understanding, or guiding these efforts when they're invisible.

While technologies like the aforementioned text-based video editor will inevitably lead both to malicious uses and more capable AI in the future, we already fall victim to similar manipulations on a daily basis. Doctored photos are nothing new, and manipulative editing showcases how context can determine meaning, a technique taught in film school. AI adds another tool to the box and increases mistrust in a medium that has always been easily manipulated. This is unpleasant to experience, but ultimately a good thing.

We put too much trust in our senses and the recordings we view. Reminders of this help prevent us from doing that. When Apple adds attention correction to video chats and Google actually makes a voice assistant that can make phone calls for you, we will need to remember that what we see and hear may not accurately represent reality. Life doesn't require accuracy to progress and thrive. Pretending we can observe objective reality does more harm than accepting we can't. We don't know everything, our purpose remains a mystery to science, and we will always make mistakes. Our problem is not with artificial intelligence, but rather that we believe we know the full story when we only know a few details.

As we enter this new era we should not fight against the inevitable technology that continues to shine a spotlight on our misplaced trust. AI continues to demonstrate the fragility of the ways we conceive of reality as a species at a very rapid pace. That kind of change hurts. We lose our footing upon realizing we had only imagined the stable ground we've walked upon our entire lives. We seek a new place of stability as we tumble through uncertainty because we see the solution as the problem. We may not be ready for this change, but if we fight the inevitable we will never be.

Artificial intelligence will continue to erode the false comforts we enjoy, and that can be frightening, but that fear is also an opportunity. It provides us with a choice: to oppose something that scares us or attempt to understand it and use it for the benefit of humanity.

Top image credit: Getty Images