AI runs smack up against a big data problem in COVID-19 diagnosis

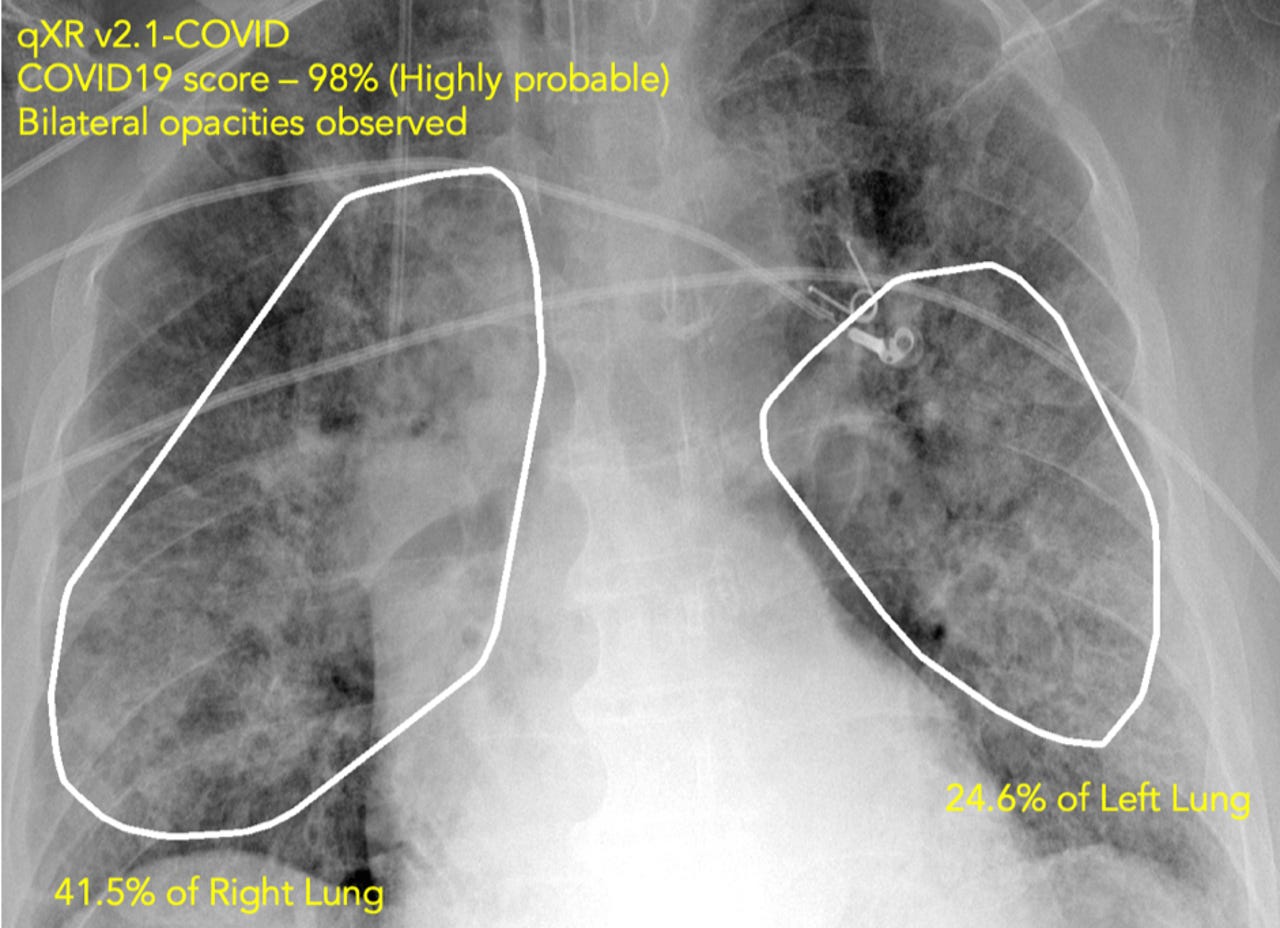

Qure.ai's software, analyzing a chest X-ray, picks up on abnormalities that suggest the likelihood of COVID-19 infection. X-rays are one of the quickest, simplest ways to diagnose the disease, and an army of AI specialists around the world is trying to speed up how the images are used to find cases. Most cite the lack of data as the prime obstacle to broader adoption of AI.

For all the frantic effort to coordinate life-saving work around the globe during the COVID-19 pandemic, the digital age finds itself hampered in one very specific respect: information. Teams of artificial intelligence researchers are trying to bring decades of technology to bear on the problem of diagnosing and treating the disease, but the data they need to develop their software programs is scattered around the globe, making it practically inaccessible.

The painful lack of data is evident in one particular use case for AI, the development of diagnostic tests for COVID-19 based on X-rays or on "computed tomography" scans of the lungs.

While the definitive tests for the disease are genetic tests, called "RT-PCR," those tests have been in notoriously short supply in many parts of the world, including the U.S. An alternative is an X-ray or CT scan. X-rays in particular are widely available throughout the world, and their results come back much more quickly than those of RT-PCR. There's a common belief that CT scans are more "sensitive" than RT-PCR, which would be a potential advantage of using them.

Analyzing X-rays and CTs takes time, so numerous scholars around the world have put together so-called deep learning neural networks that can compute whether there are anomalies in the scans. The idea is to ease the burden on radiologists suddenly inundated with COVID-19 patients. Triaging the scans as a kind of first pass can yield a preliminary analysis that places priority cases at the head of a radiologist's workflow.
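To make the triage idea concrete, here is a minimal Python sketch, assuming a model has already assigned each scan a probability of abnormality. The scan IDs and scores are hypothetical, and real systems plug into hospital worklist software rather than a Python list; the point is only that the highest-scoring scans rise to the top of the queue.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class QueuedScan:
    # heapq is a min-heap, so the score is stored negated to pop the most suspicious scan first
    sort_key: float
    scan_id: str = field(compare=False)


def build_worklist(scores):
    """Return scan IDs ordered from most to least suspicious.

    `scores` maps a hypothetical scan ID to a model's anomaly probability.
    """
    heap = [QueuedScan(-score, scan_id) for scan_id, score in scores.items()]
    heapq.heapify(heap)
    return [heapq.heappop(heap).scan_id for _ in range(len(heap))]


worklist = build_worklist({"scan-A": 0.12, "scan-B": 0.91, "scan-C": 0.47})
print(worklist)  # ['scan-B', 'scan-C', 'scan-A']
```

A heap keeps insertion and removal cheap as new scans arrive throughout the day; for a fixed batch, a single sort would do the same job.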

The AI miracle?

Articles have circulated describing amazing successes, particularly in China, of a number of AI diagnosis projects, including Chinese software maker Infervision, Chinese insurance firm Ping An's healthcare division, Chinese search giant Alibaba, and Chinese tech startups Deepwise Technology and Iflytek. Media stories make it sound as if AI is a miracle technology that can be turned on and start churning out diagnoses.

The reality is less exhilarating. Despite some successes, numerous efforts face challenges, the biggest being access to data. Especially with a novel disease such as COVID-19, which is distinct from other pulmonary infections, the presence of distinguishing features isn't always conclusive, so the analysis needs to be tweaked for the novel condition.

"We see great potential of this technology, but they are slow in actual deployment," according to Wei Xu of the Institute for Interdisciplinary Information Sciences at Tsinghua University in Beijing, China, who answered questions from ZDNet in an email.

Xu is part of a team of over 30 researchers who built a deep learning system to read CT scans. The system was deployed in 16 hospitals in China, including in Wuhan, and reached a rate of 1,300 screenings per day. That initial success has run up against the reality that it can be hard to move forward in some countries. With the situation in China having abated, "We are in a progress of deploying the system in Europe, but the process has been slow," wrote Xu.

Help the radiologists

It's simple in theory to identify what a computer should look for. An X-ray or a CT scan will show formations in the lung that are associated with a number of respiratory conditions including pneumonia. The feature in an image most often linked to a COVID-19 case, although not exclusive to COVID-19, is what's called "ground-glass opacity," a kind of haze hovering in an area of the lung, caused by a build-up of fluid. Opacities and other anomalies can show up even in asymptomatic COVID-19 patients.

What slows things down is that neural networks have to be tuned to pick out opacities in the pixels of a high-resolution image, and that takes data. It also takes time working with physicians who know what to look for in the data. Both data and expertise are in short supply at the outset of a pandemic.

The neural network programs that Xu and others are deploying have been refined by computer scientists to a high degree of sophistication over many years, and they provide ready tools with which to build new systems. The system that Xu and team built combines two deep learning neural networks: a "ResNet-50," for many years a standard for image recognition, and something called "UNet++," developed at Arizona State University in 2018 for medical image segmentation, the task of outlining structures such as organs or lesions in a scan.

Like most of these kinds of applications, the neural networks use an operation called convolution, the bedrock of modern image recognition systems. A convolutional neural network summarizes repeated motifs in the data. By summarizing the summaries, if you will, in successive levels of abstraction, a neural network forms a mathematical measurement of whether the pixels of an image contain an anomaly, such as an opacity.
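The "summarizing" a convolution does can be shown with a toy example. The plain-Python sketch below slides a hypothetical 3x3 kernel of ones over a 6x6 image containing a small bright patch (a stand-in for an opacity), applies the ReLU non-linearity, and then summarizes the result again with 2x2 max pooling. Real networks learn their kernel weights from data and stack many such layers; nothing here reflects any particular system in this article.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (no padding).

    Like most deep learning libraries, this is technically cross-correlation:
    the kernel is not flipped before sliding it over the image.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Zero out negative responses, keeping positive ones unchanged."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool2x2(feature_map):
    """Summarize each non-overlapping 2x2 block by its largest value."""
    return [
        [
            max(feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1])
            for j in range(0, len(feature_map[0]) - 1, 2)
        ]
        for i in range(0, len(feature_map) - 1, 2)
    ]


# Hypothetical 6x6 image: a bright 2x2 patch (a stand-in for an opacity) in the top-left
image = [[0] * 6 for _ in range(6)]
for r in (0, 1):
    for c in (0, 1):
        image[r][c] = 1

kernel = [[1] * 3 for _ in range(3)]  # hypothetical "local brightness" detector
features = relu(conv2d(image, kernel))
summary = max_pool2x2(features)
print(features)  # [[4, 2, 0, 0], [2, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
print(summary)   # [[4, 0], [0, 0]]
```

The pooled summary keeps only one strong response, in the quadrant where the patch sits, which is the sense in which each layer summarizes the one before it.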

A data problem, a really big data problem

The theory is solid, but in practice, things became challenging. "AI systems requires lots of labels," Xu explained via email. Labels are annotations of images, made by human radiologists, that are used in training to tune the settings of the neural net so that it properly summarizes the pixels of data. "And labeling require physician time, which is hard to get," adds Xu.

Wei Xu of Tsinghua University and colleagues at other Chinese institutions built an AI system that not only analyzes CT scans but also integrates with a radiologist's workflow. Trying to bring the system to Europe has been slowed by various factors including lack of access to data, says Xu.

Xu and team had to design an entire "pipeline" for COVID-19 that makes it easy for a non-specialist, in collaboration with a trained radiologist, to apply labels to images and so bootstrap the training of the network. The system was fed 1,136 cases from Chinese hospitals, 723 of which were positive for COVID-19. The other cases had other kinds of pulmonary infections, such as conventional pneumonia, so that the program's convolutions would be tuned to distinguish ground-glass opacities and other features of COVID-19 from the features of other conditions.
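The case mix described here is lopsided: 723 of the 1,136 cases were COVID-19, leaving 413 with other conditions. One common way to keep a classifier from simply favoring the larger class is to weight each class inversely to its frequency during training. Whether Xu's team did this isn't stated; the Python sketch below only illustrates the arithmetic of that widely used heuristic, applied to the reported counts.

```python
def inverse_frequency_weights(class_counts):
    """Weight each class by total / (num_classes * count).

    After weighting, every class contributes the same total weight to the training loss.
    """
    total = sum(class_counts.values())
    num_classes = len(class_counts)
    return {label: total / (num_classes * count) for label, count in class_counts.items()}


counts = {"covid": 723, "other": 1136 - 723}  # 413 non-COVID cases
weights = inverse_frequency_weights(counts)
print({label: round(w, 3) for label, w in weights.items()})  # {'covid': 0.786, 'other': 1.375}
```

Each minority-class example counts for a little more than each majority-class example, so both groups carry an equal share (568 "effective cases" each) of the training signal.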

Despite all that work, and despite some success in deployment, getting further data to refine the program was challenging, said Xu. "It is difficult for AI experts to understand what physicians really needs."

"Designing such AI requires deep understanding about both computer science and medical practice, and such students are difficult to train," said Xu. As a result, "I do not believe AI will replace human physicians in any near future, and thus AI will need to work with physicians."

The pipeline that integrates the AI with the radiologist's workflow "is the most valuable part of an AI-assisted diagnosis system, and also the most time-consuming and challenging module to build," Xu told ZDNet. In contrast, the Infervision system, he said, "runs as an alternative process that screens the CT images independent of radiologists."

Neural nets that don't require labels, an approach known as unsupervised learning, could help ease the data burden. Unsupervised training of a neural network finds motifs by composing summaries of the data without any annotations from people.
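Unsupervised methods come in many forms. Plain k-means clustering, a classic unsupervised technique rather than a neural network, gives the flavor: it groups data purely by similarity, with no human-supplied labels. In the minimal Python sketch below, the input values are hypothetical per-region brightness scores; the algorithm discovers two groups on its own, but it cannot say what either group means, which is the gap Xu describes.

```python
import random


def kmeans_1d(values, k, iterations=20, seed=0):
    """Plain k-means on scalar values: no labels, only distances between points."""
    rng = random.Random(seed)
    centers = rng.sample(values, k)  # start from k distinct data points
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centers[c]))
            clusters[nearest].append(v)
        # Move each center to the mean of its cluster; keep it in place if the cluster is empty
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return sorted(centers)


brightness = [0.1, 0.12, 0.09, 0.11, 0.8, 0.82, 0.79, 0.81]  # hypothetical values
centers = kmeans_1d(brightness, k=2)
print([round(c, 3) for c in centers])  # [0.105, 0.805]
```

The two centers separate "dim" from "bright" regions, yet nothing in the output says which group, if either, corresponds to disease; that meaning has to come from somewhere else, typically labels.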

But at this stage, that's more an exotic research direction than a practical avenue, said Xu. "Without any labels to direct the learning, [it's] just like asking you, 'how do you describe water?'" said Xu. "There are just too many dimensions to describe it, and it is really hard to find a precise description."

Aiming for fully automatic AI

Some researchers are, indeed, trying to do away with labeling, and the results are mixed.

Jie Tian, the director of the CAS Key Laboratory of Molecular Imaging at the Chinese Academy of Sciences in Beijing, has worked with colleagues at other institutions to develop a system that does away with human annotations, what they call "fully automatic."

They took a form of convolutional network introduced in 2016, called a "DenseNet," in which each layer within a block receives the summaries produced by all the layers before it, so that it combines summaries of pixels from multiple levels of abstraction.
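The "dense" in DenseNet refers to how layers are wired: within a dense block, each layer receives the concatenated feature maps of every layer before it and adds a fixed number of new feature maps, the "growth rate." The short Python sketch below just does that bookkeeping. The numbers (64 input channels, growth rate 32, six layers) mirror the first block of the standard DenseNet-121 configuration; which DenseNet variant Tian's team used isn't specified here.

```python
def dense_block_channels(in_channels, growth_rate, num_layers):
    """Return the input channel count seen by each layer, plus the block's output count.

    Every layer concatenates the block input with the outputs of all earlier layers,
    then contributes `growth_rate` new channels of its own.
    """
    inputs = [in_channels + growth_rate * layer for layer in range(num_layers)]
    output = in_channels + growth_rate * num_layers
    return inputs, output


inputs, output = dense_block_channels(in_channels=64, growth_rate=32, num_layers=6)
print(inputs)  # [64, 96, 128, 160, 192, 224]
print(output)  # 256
```

Because the inputs grow linearly with depth, DenseNets place "transition" layers between blocks to shrink the channel count again.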

The pipeline of a "fully automatic" AI system developed by Jie Tian of the Chinese Academy of Sciences in Beijing and colleagues at collaborating institutions. It can spare radiologists some of the work of annotating CT scans. Because a larger dataset to optimize the program is currently lacking, the software may remain a research project for the time being.

Tian and colleagues trained the network in two stages. First, they input 4,016 scans of people with lung cancer, so that the network was tuned to create summaries that reflect lung abnormalities generally. They then input a second data set of 1,266 patients confirmed for COVID-19, so that the summaries learned in the first stage would be fine-tuned toward the abnormalities that dominate in COVID-19. It's a form of what's called "transfer learning," which is increasingly common in many AI applications.
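Transfer learning can be sketched without any deep learning library. In the Python example below, a tiny logistic-regression model stands in for Tian's network (an assumption made purely for illustration): it is first trained on a hypothetical "any lung abnormality" dataset, and its learned weights then become the starting point for a second, shorter round of training on a hypothetical COVID-19 dataset. The features and labels are invented; only the two-stage structure reflects the description above.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, x):
    """Probability of the positive class for one feature vector."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)))


def log_loss(weights, data):
    """Mean binary cross-entropy; each example is (features, label)."""
    total = 0.0
    for x, y in data:
        p = min(max(predict(weights, x), 1e-12), 1 - 1e-12)  # guard against log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train(data, init_weights, lr=0.5, epochs=200):
    """Full-batch gradient descent starting from `init_weights` (copied, not mutated)."""
    w = list(init_weights)
    n = len(data)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y  # gradient of the loss w.r.t. the logit
            for i, xi in enumerate(x):
                grad[i] += err * xi / n
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


# Hypothetical examples: ([bias, haze_score, nodule_score], label)
general_abnormality = [
    ([1.0, 0.9, 0.7], 1), ([1.0, 0.8, 0.1], 1), ([1.0, 0.2, 0.9], 1),
    ([1.0, 0.1, 0.1], 0), ([1.0, 0.2, 0.0], 0), ([1.0, 0.0, 0.2], 0),
]
covid = [
    ([1.0, 0.9, 0.1], 1), ([1.0, 0.8, 0.3], 1),
    ([1.0, 0.2, 0.9], 0), ([1.0, 0.1, 0.1], 0),
]

pretrained = train(general_abnormality, [0.0, 0.0, 0.0])  # stage 1: broad abnormalities
finetuned = train(covid, pretrained, lr=0.1, epochs=50)   # stage 2: gentler, shorter tuning
print(log_loss(finetuned, covid) < log_loss(pretrained, covid))  # True
```

Here every weight is updated in the second stage; practitioners often freeze some layers of a deep network instead, a choice this toy model is too small to show.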

The program yielded good results, but the project remains essentially "scientific research," Tian told ZDNet.

Doing away with human annotations is clever, but it doesn't remove the need for more data.

"Due to the variance of the characteristics of COVID-19 in different regions or countries, a larger and prospective dataset from more regions is needed before we develop our research into a commercial diagnostic software," said Tian.

"We are trying our best to optimize our research and validate it in a larger dataset," added Tian. "Consequently, we may not develop this system into commercial use currently."

The world needs to come together

Others have picked up on the lack of data. In a paper last month, scholars from the World Health Organization, the United Nations Global Pulse, and Montreal's Mila institute for AI surveyed the landscape of AI applications, from diagnosis to potential cures, including X-ray and CT scan software.

The authors concluded that "ML [machine learning] and AI can support the response against COVID-19 in a broad set of domains," adding, "However, we note that very few of the reviewed systems have operational maturity at this stage."

Asked why so few programs have reached maturity, Alexandra Luccioni, an author of the paper and Director of Scientific Projects at Mila, told ZDNet, "It's a matter of sharing data globally."

"Currently, that is not the case, there has not been much global cooperation around global data sharing."

"I think it would help if the WHO made a central database with de-identifying mechanisms, and some really good encryption," said Dr. Luccioni. "That way, local health authorities would be reassured and motivated to share their data with each other."
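Luccioni doesn't specify a mechanism. As one minimal example of what "de-identifying" can involve, the Python sketch below drops direct identifiers from a record and replaces the patient ID with a keyed hash (HMAC-SHA256 from Python's standard library), so records from the same patient can still be linked without revealing who the patient is. The field names, record, and key are hypothetical, and real medical de-identification, such as scrubbing identifiers embedded in image file headers, involves considerably more.

```python
import hashlib
import hmac

DIRECT_IDENTIFIERS = ("name", "address", "birth_date")  # hypothetical field names


def pseudonymize(patient_id: str, key: bytes) -> str:
    """Map a patient ID to a stable pseudonym with HMAC-SHA256.

    Without the key, an outsider can't reverse the mapping by hashing candidate IDs.
    """
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record: dict, key: bytes) -> dict:
    """Return a copy of the record without direct identifiers and with a pseudonymous ID."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_id"] = pseudonymize(record["patient_id"], key)
    return cleaned


record = {
    "patient_id": "H-00017",
    "name": "Jane Doe",
    "birth_date": "1970-01-01",
    "finding": "ground-glass opacity",
}
shared = deidentify(record, key=b"hypothetical-site-key")
print(sorted(shared))  # ['finding', 'patient_id']
```

A keyed hash rather than a plain one matters because patient IDs often follow guessable patterns; with a plain hash, anyone could hash every plausible ID and match the results.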

"Currently, as far as I know, no such central repository exists," Luccioni added. Luccioni's observation echoes views of other medical and computing professionals who say some kind of "Big Data" initiative is needed to give researchers more to work with.

Left to their own devices, researchers such as Wei Xu of Tsinghua are having to navigate issues of data privacy. "Different countries have different regulations about patient privacy, even for anonymized datasets, but training an AI model require collecting lots of data," Xu remarked to ZDNet. Without any overarching system to coordinate things, "we are working on privacy-preserving data processing methods (using cryptography techniques) to solve the issue."
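Which cryptographic techniques Xu's team is using isn't detailed here. One well-known building block for this kind of privacy-preserving computation is additive secret sharing, sketched below in Python as an illustration rather than as Xu's method. Each hospital splits a private number, such as a hypothetical count of positive cases, into random shares that individually reveal nothing; combining everyone's shares yields the total without any party seeing another site's value. For simplicity the whole protocol runs in one process here, whereas in practice each share would be sent to a different party.

```python
import secrets

MODULUS = 2**61 - 1  # a prime; all arithmetic is done modulo this value


def share(value, num_parties):
    """Split an integer into random additive shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(num_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_values):
    """Simulate a secure sum among len(private_values) parties.

    Party j receives share j from every site and publishes only the sum of what it
    received; adding those partial sums reveals the total but no single site's input.
    """
    n = len(private_values)
    all_shares = [share(v, n) for v in private_values]  # row i holds site i's shares
    partial_sums = [sum(row[j] for row in all_shares) % MODULUS for j in range(n)]
    return sum(partial_sums) % MODULUS


site_counts = [120, 45, 300]  # hypothetical per-hospital counts
print(secure_sum(site_counts))  # 465
```

Sums are enough for simple statistics; training a full model this way requires more elaborate protocols built on the same idea.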

Commercial AI plays to its strengths

While academic efforts struggle, some commercial firms have a leg up from years spent working with doctors and analyzing scans.

One such company is Qure.ai, a three-and-a-half-year-old startup headquartered in Mumbai, India, with offices in New York. It has received $16 million in venture capital funding from Sequoia Capital and others. Qure.ai has been installing software that uses AI to analyze X-rays and CTs for almost three years. The company now has 105 sites live in 25 countries around the world, including 35 sites that are using the technology specifically for COVID-19. The COVID-19 installations just got going two weeks ago.

The company's software can be deployed remotely within a matter of hours, which means that lockdowns don't have to keep new sites from going live. The technology can even be used by mobile units that go door-to-door in some of the poorest neighborhoods in the world, conducting on-site tests.

Qure.ai's technology is analyzing 5,000 scans per week, founder and chief executive Prashant Warier told ZDNet in a phone interview, and the company expects that the rate will increase to 5,000 per day in a couple of weeks.

"We had prepared very well" before COVID-19 entered the picture, Warier told ZDNet. Over the past three years, the company's neural networks have been trained on 2.5 million sample scans, all annotated, covering a variety of respiratory issues, including pneumonia, tuberculosis, and emphysema. That meant things such as ground-glass opacities "were all already part of our capability" in the computer model, said Warier.

Those with less data will struggle, Warier observed. "Anything that is out there that has 2,000 scans and is trained on a COVID model, it might run very well on a specific dataset, but when you start to generalize to new datasets, it is not going to work very well."

"Generalization is a huge challenge with a lot of different kinds of data from different locations," said Warier.

"We had prepared very well" for COVID-19 after years of analyzing X-rays, says Qure.ai founder and CEO Prashant Warier. Those with less data will struggle, he predicted.

Qure.ai's system uses convolutional networks, but "we have built a lot of things on top of that," said Warier, including algorithms that automatically detect which parts of the lung appear in which areas of the picture. One of the biggest innovations is a natural language processing network, developed by Qure.ai, that can pick out words associated with a scan that can serve as its label. That can reduce the amount of work a human radiologist needs to do to label the image.
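Qure.ai's approach is a neural network, and its details aren't spelled out here. The Python sketch below is a much cruder, rule-based stand-in that shows the basic idea of turning free text into training labels: it assumes each scan comes with a written report, looks for a hypothetical list of finding terms, and treats a term as negative when a negation cue such as "no" precedes it in the same sentence.

```python
import re

# Hypothetical vocabulary: report phrase -> label name
FINDING_TERMS = {
    "ground-glass": "ground_glass_opacity",
    "consolidation": "consolidation",
    "pleural effusion": "pleural_effusion",
}
NEGATION_CUES = ("no ", "without ", "negative for ")


def extract_labels(report: str) -> dict:
    """Return {label: True/False} for each finding term mentioned in the report.

    A mention is negative if a negation cue appears earlier in the same sentence;
    if a finding is mentioned more than once, any positive mention wins.
    """
    labels = {}
    for sentence in re.split(r"[.;\n]", report.lower()):
        for term, label in FINDING_TERMS.items():
            idx = sentence.find(term)
            if idx == -1:
                continue
            negated = any(cue in sentence[:idx] for cue in NEGATION_CUES)
            labels[label] = labels.get(label, False) or not negated
    return labels


labels = extract_labels("Bilateral ground-glass opacities in the lower lobes. No pleural effusion.")
print(labels)  # {'ground_glass_opacity': True, 'pleural_effusion': False}
```

Rules like these break easily on hedged or unusual phrasing ("cannot exclude effusion"), which is one reason a learned language model is attractive for the job.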

Even commercial software needs more data

Warier claims very good numbers for accuracy, as well as for "sensitivity" and "specificity": respectively, the proportion of people who actually have a disease whom the test correctly identifies as positive, and the proportion of people who don't have it whom the test correctly identifies as negative. Even so, more data is still needed to improve results. "We want to improve it, absolutely," says Warier of both measures. While the software's sensitivity and specificity are already very high, says Warier, on the order of 95% each, there is room for improvement given the diverse ways COVID-19 manifests itself worldwide. "The challenge is that not everyone with an opacity, say, has COVID-19, it could be a bacterial infection," Warier told ZDNet.
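The two measures are simple ratios over a test's outcomes. In the Python sketch below, the counts are hypothetical, chosen only so that both measures come out at the roughly 95% level Warier describes: 95 of 100 patients who have the disease are flagged, and 190 of 200 who don't are cleared.

```python
def sensitivity_specificity(truth, predicted):
    """Compute (sensitivity, specificity) from parallel lists of booleans.

    sensitivity = TP / (TP + FN): share of truly positive cases the test flags
    specificity = TN / (TN + FP): share of truly negative cases the test clears
    """
    pairs = list(zip(truth, predicted))
    tp = sum(1 for t, p in pairs if t and p)
    fn = sum(1 for t, p in pairs if t and not p)
    tn = sum(1 for t, p in pairs if not t and not p)
    fp = sum(1 for t, p in pairs if not t and p)
    return tp / (tp + fn), tn / (tn + fp)


truth = [True] * 100 + [False] * 200                                   # hypothetical ground truth
predicted = [True] * 95 + [False] * 5 + [False] * 190 + [True] * 10   # hypothetical test calls
print(sensitivity_specificity(truth, predicted))  # (0.95, 0.95)
```

In these made-up numbers, the 10 healthy patients flagged by mistake are where cases like an opacity caused by a bacterial infection would land.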

There is also what is known as "drift in the data distribution," noted Warier. Different X-ray machines, say one from Philips and another from Fuji, render images differently. "Each X-ray machine has a different signature," he notes, and "even the settings of a certain kind of machine, it can vary from site to site." Qure.ai even has to differentiate between X-ray machines that take an old-fashioned film picture and those that take a modern digital image (it can handle both types).
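How Qure.ai handles this drift isn't described here. A common first step in medical imaging pipelines generally is to standardize each image's intensities, which cancels out simple differences in brightness and contrast. The Python sketch below assumes an image flattened to a list of pixel values and two hypothetical machines whose outputs differ only by a linear rescaling; real machine signatures, such as differences in noise and post-processing, are not removed this simply.

```python
import statistics


def standardize(pixels):
    """Rescale one image's pixel values to zero mean and unit standard deviation.

    Applied per image, this cancels linear differences in brightness and contrast.
    """
    mean = statistics.fmean(pixels)
    std = statistics.pstdev(pixels)
    if std == 0:  # a perfectly flat image carries no contrast to rescale
        return [0.0 for _ in pixels]
    return [(p - mean) / std for p in pixels]


machine_a = [10, 20, 30, 40]                 # hypothetical pixel values
machine_b = [2 * v + 50 for v in machine_a]  # same anatomy, brighter and higher contrast
same = all(abs(a - b) < 1e-9 for a, b in zip(standardize(machine_a), standardize(machine_b)))
print(same)  # True
```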

The promise of deep learning forms of artificial intelligence has always been that the computer program finds things humans didn't think of, or didn't know to ask. As Qure.ai moves forward, Warier believes it's possible that the "model might discover something new."

"Maybe the algorithm picks up certain features which a radiologist might not have thought of," he told ZDNet. "The models might learn something more than a radiologist knows."

Let a thousand flowers bloom

Perhaps. In the meantime, given the shortage of data, there is an effort to put AI tools into the hands of anyone with access to data who might be able to use them. That is the insight of Canadian startup DarwinAI, based in Waterloo, Ontario, with roots in the University of Waterloo.

DarwinAI has open-sourced a deep learning model for X-ray analysis called COVID-Net. The convolutional neural network was developed using 5,941 X-rays from 2,839 patients, drawn from data sets that are also available online.

The DarwinAI program for chest X-ray analysis, "COVID-Net," can combine with an analysis tool called GSInquire to show which lung areas are being focused on by the neural network, to help physicians understand the network's diagnosis. DarwinAI hopes open-sourcing the system will bring greater collaboration, especially with datasets around the world.

"I know a lot of people out there claiming incredible accuracies for AI solutions and I want to make it very clear that we are not," DarwinAI's Chief Scientist, and a co-founder, Alexander Wong, told ZDNet. "COVID-Net's goal is to drive innovation towards a clinically-viable solution to help, but right now it is not production-ready and is in its early stages, and we are doing our best to improve it with the rest of the global community towards that goal."

"We want to do it right," he said, "and work with clinical sites and experts to get things right."

In particular, the system needs more data, he said. Based on 500 patient cases so far, COVID-Net has been achieving sensitivity and specificity of 80%, so it misses 20% of those who actually have the disease and misclassifies 20% of those who are healthy as diseased.

Wong, who co-authored a pre-print article on the system along with colleague Linda Wang, said he and his team "have been in contact with a number of hospitals and medical facilities to discuss how we can work together to improve the proposed system through a greater amount of data, which is one of the key bottlenecks to implementing the proposed system in practice."

Wong hopes to reach a "milestone" of "at least 500 additional patient cases from which the proposed system will learn from, which we hope will be achieved in the coming months, so that the system will come much closer to clinical use."

As results improve, a big advantage of COVID-Net, Wong believes, is that it can be "explainable." In their paper, Wong and Wang demonstrated how "GSInquire," a tool for "explainable AI" developed by DarwinAI, can highlight the areas of the X-ray image that drive most of the summaries the neural net creates. That would, in theory, help a physician see why the program reaches a certain conclusion, in terms that make sense to the physician.
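How GSInquire works internally isn't described here. A simpler, well-known explainability technique, occlusion sensitivity, conveys the general idea: blank out one patch of the image at a time and measure how much the model's output drops. The Python sketch below uses a deliberately trivial, hypothetical "model" that only looks at the top-left corner of a 4x4 image, so the resulting heat map should point straight at that corner.

```python
def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Blank out each patch in turn and record how much the model's score drops.

    `score_fn` is any function mapping a 2D image (list of lists) to a number,
    such as a classifier's disease probability. Larger drops mark regions the
    model relies on most.
    """
    base = score_fn(image)
    h, w = len(image), len(image[0])
    heat = []
    for i in range(0, h - patch + 1, patch):
        row = []
        for j in range(0, w - patch + 1, patch):
            occluded = [r[:] for r in image]  # copy so the original image is untouched
            for di in range(patch):
                for dj in range(patch):
                    occluded[i + di][j + dj] = fill
            row.append(base - score_fn(occluded))
        heat.append(row)
    return heat


def toy_score(img):
    """Hypothetical model whose output depends only on the top-left 2x2 region."""
    return img[0][0] + img[0][1] + img[1][0] + img[1][1]


image = [[1.0] * 4 for _ in range(4)]
print(occlusion_map(image, toy_score))  # [[4.0, 0.0], [0.0, 0.0]]
```

Occlusion needs one model evaluation per patch, which makes it slow on large images but easy to apply to any model without access to its internals.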

The way forward

Asked how researchers should proceed given the challenge of amassing data and integrating with physicians' practice, Wong replied, "This may sound cliche, but my advice is to be persistent, focused, work hard, and be open to working with others with greater expertise than you to do better as a collective."

The great rush of effort by AI scientists, and their sudden immersion in a real-world setting with critical demands, cannot help but have a profound effect on the field of AI. Right now, scientists need access to data on a much larger scale. To the extent they can get it, it appears AI can have a real impact. How that struggle changes the discipline of AI will become clear in the years to come.