A revered former boss of mine had a proverb to wheel out on the many occasions when our team was called on to do research into an obviously doomed project: “If something isn’t worth doing, it isn’t worth doing well.” It’s hard not to be reminded of that saying when looking at the carnage caused by the application of the Ofqual algorithm to the predicted grades of this year’s A-level students, who were unable to sit exams because of the Covid-19 lockdown.

The problem was fundamentally insoluble, from a mathematical point of view. If the system is dependent on exams to allocate the grades, but it can’t have the exams, then it can’t allocate the grades. No statistical method in the world is going to be able to give you good results if the information you’re looking for is fundamentally not there in the dataset that you’re trying to extract it from. (Hollywood is just wrong on this one when it has people looking at grainy CCTV footage and saying “Let’s enhance.”)

Given the constraint that it was doing an impossible job, Ofqual, the exam regulator for England, did … not very well, to be quite honest. It seems to have been aware that there was no real possibility of giving even reasonably accurate grades to individuals, so instead retreated to a concept of “fair on average” – largely defined in terms of the amount of overall grade inflation it was prepared to tolerate. Fitting grades to a predetermined distribution is a much easier task, so it’s not surprising that it achieved this aim.
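To see why hitting a predetermined distribution is the easy part, here is a toy sketch. It is not Ofqual's actual model; the function name, the inputs and the rounding rule are all assumptions made for illustration. Given nothing but a rank order of pupils and a target share for each grade, it hands out grades so that the totals come out as planned, whether or not any individual pupil gets the grade they deserved.

```python
def fit_to_distribution(ranked_students, target_shares):
    """Assign grades by rank so the overall shares match a target.

    ranked_students: list of pupil names, strongest first (hypothetical input).
    target_shares: list of (grade, share) pairs, best grade first,
                   with shares summing to 1 (hypothetical input).
    """
    n = len(ranked_students)
    grades = {}
    cumulative = 0.0
    start = 0
    for grade, share in target_shares:
        cumulative += share
        # Index of the last pupil (exclusive) who receives this grade.
        end = round(cumulative * n)
        for student in ranked_students[start:end]:
            grades[student] = grade
        start = end
    # Anyone left over through rounding receives the lowest grade.
    lowest_grade = target_shares[-1][0]
    for student in ranked_students[start:]:
        grades[student] = lowest_grade
    return grades
```

Nothing in the function looks at what a pupil actually knows. The distribution is guaranteed by construction, which is exactly why matching it says so little about accuracy for individuals.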

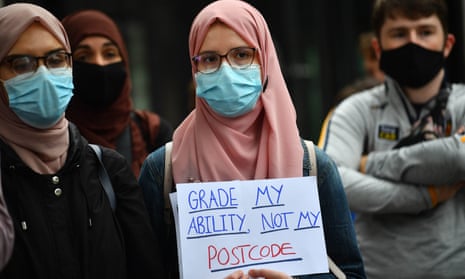

What’s less comprehensible, though, is that to manage this, Ofqual decided to apply its smoothing algorithm most rigorously to the cases where it had the most data, and not at all when there were too few data points to fit the algorithm to. Although that might be a wonk’s idea of a sensible estimation process, a moment’s reflection should have had someone involved saying something like: “Doesn’t that mean the algorithm is going to apply to big centres (like FE colleges) but not small centres (like public schools), which is a problem if it’s pulling the grades down a lot?” It’s just not good enough, if you’re so concerned with maintaining consistent outcomes from year to year, to tolerate inconsistent outcomes with such an obvious socioeconomic bias.
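The size effect can be made concrete with a minimal sketch. The thresholds `small` and `large`, the linear taper between them and the grade-point scale are illustrative assumptions rather than a reproduction of Ofqual's published rules. The only feature taken from the argument above is that small cohorts keep the teacher's grade and large cohorts get the statistical one.

```python
def teacher_weight(cohort_size, small=5, large=15):
    """Weight given to the teacher's grade for a cohort of this size.

    The thresholds `small` and `large` are hypothetical values
    chosen for illustration only.
    """
    if cohort_size <= small:
        return 1.0  # too few data points: teacher grade used as-is
    if cohort_size >= large:
        return 0.0  # plenty of data: statistical grade used in full
    # Linear taper between the two thresholds (an assumption).
    return (large - cohort_size) / (large - small)


def blended_grade(teacher_points, model_points, cohort_size):
    """Blend teacher and model grades (as numeric points) by cohort size."""
    w = teacher_weight(cohort_size)
    return w * teacher_points + (1 - w) * model_points
```

With these made-up numbers, a class of four keeps its teacher-assessed grade untouched, while a class of 200 at a large college gets the model's grade in full. If the model is pulling grades down, only the second group feels it.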

But even if, impossibly, all the problems had been solved, the Ofqual report on its own methodology gives the game away with respect to a much more serious issue. If there had been a perfect solution to the problem of a pandemic-hit examination process, so that every candidate was given exactly the grade that they would have got in an exam, how fair would this be? Turn to page 81 to see the answer and weep. In any subject other than maths, physics, chemistry, biology or psychology, there’s no better than a 70% chance that two markers of the same paper would agree on the grade to be assigned. Anecdotally, by the way, “two markers” in this context might mean “the same marker, at two different times”. For large numbers of pupils over the past several decades, it’s quite possible that grading differences at least as large as those produced by the Ofqual algorithm could have been introduced simply because your paper was in the pile that got marked before, rather than after, the marker had a pleasant lunch.
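A little arithmetic shows how quickly that adds up. The sketch below takes the 70% agreement figure at face value and assumes, purely for illustration, that disagreements are independent from one paper to the next; the function names are my own.

```python
def prob_all_agree(agreement_rate, n_papers):
    """Chance that two markers agree on every one of n_papers,
    assuming each paper is an independent draw (an assumption)."""
    return agreement_rate ** n_papers


def expected_disagreements(agreement_rate, n_candidates):
    """Expected number of candidates, out of n_candidates, on whose
    grade two markers would disagree."""
    return (1 - agreement_rate) * n_candidates
```

On those assumptions, `prob_all_agree(0.7, 3)` comes out at roughly one in three for a pupil taking three such subjects, and `expected_disagreements(0.7, 1000)` at about 300 candidates in every thousand.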

This is really bad. Very few industrial processes would tolerate this level of test/retest inaccuracy – a medical trial would have next to no chance of getting approval. Almost incomprehensibly, Ofqual seems to regard it as a reason for not worrying too much about this year’s algorithm, rather than a reason for thinking that the whole process is horribly flawed. Particularly at the cusp of pass versus fail (and particularly in GCSE English, where not having the qualification really does limit your life choices), this isn’t the sort of situation where you can tolerate this kind of inaccuracy.

Which raises the question: when we see a big gap between the teachers’ predicted grades and the “adjusted” versions meant to bring those grades into line with previous years’ exam results, why would we necessarily believe that it was the teachers who were wrong and the exam results that were right?

Exam results are not by any means a fact of nature. Everyone knows they’re affected by things as diverse as the pollen count and the date of Ramadan. Reducing the importance of coursework was a measure that was never particularly well justified by evidence, and dismissing the teacher assessments as vulnerable to fraud is a bit of a slur on professional integrity. Coronavirus has demonstrated how fragile an exam-based system can be in a crisis, but it has also given us some telling insight into whether high-stakes examinations are any use at all.